Gwangju Institute of Science and Technology (GIST)

I am a Ph.D. student in Human-Computer Interaction (HCI) at the Gwangju Institute of Science and Technology (GIST).

I obtained my Bachelor's degree in Electrical Engineering and Computer Science from GIST, and I am currently pursuing the integrated Master's and Ph.D. program in HCI at GIST.

My research focuses on Human-AI Interaction, Generative AI, and Creativity Support.

Education

- Gwangju Institute of Science and Technology (GIST), Ph.D. Student in Human-Computer Interaction, Mar. 2021 - present
- Gwangju Institute of Science and Technology (GIST), B.S. in Electrical Engineering and Computer Science, Magna Cum Laude, Mar. 2017 - Feb. 2021

Honors & Awards

- GIST-DH Global Startups Idea Contest, 3rd Place, Jun. 2022
- GIST Best Paper Award, Feb. 2021
- National Excellence Scholarship for Science and Engineering, Apr. 2019 - Feb. 2021
- Honor Scholarship (awarded to students with a high GPA), 2017, 2018

Selected Publications

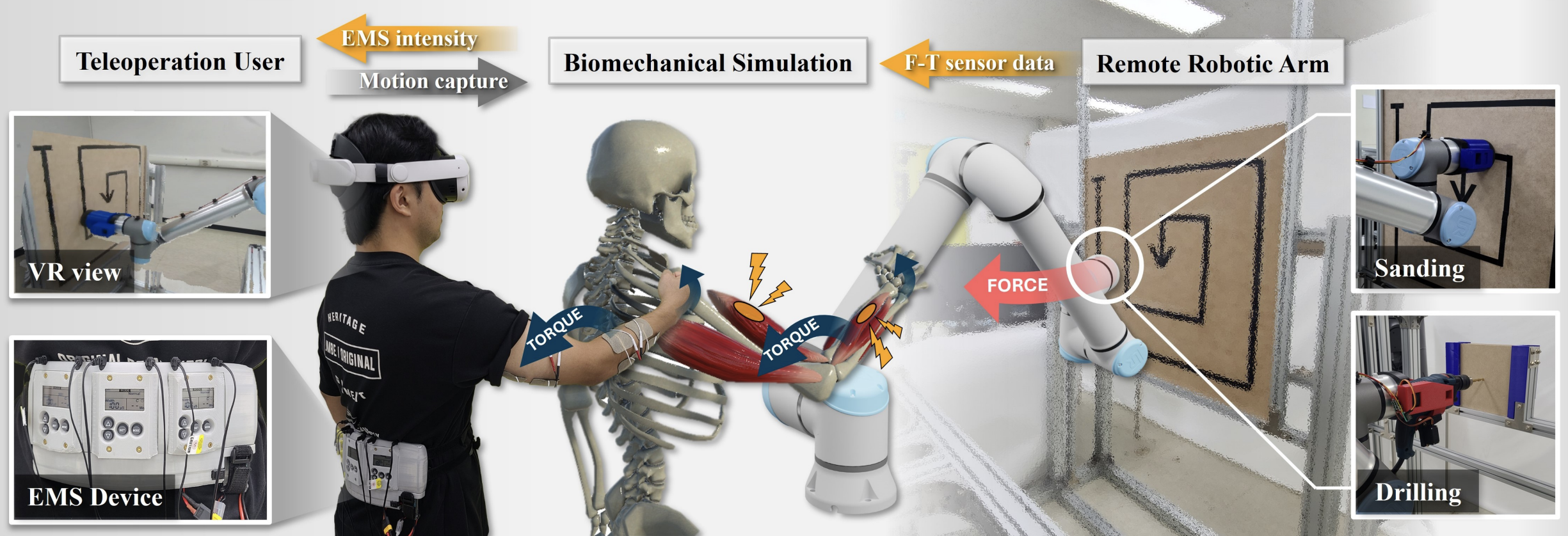

TelePulse: Enhancing the Teleoperation Experience through Biomechanical Simulation-Based Electrical Muscle Stimulation in Virtual Reality

Seokhyun Hwang *, Seongjun Kang *, Jeongseok Oh *, Jeongju Park, Semoo Shin, Yiyue Luo, Joseph DelPreto, Sangbeom Lee, Kyoobin Lee, Wojciech Matusik, Daniela Rus, SeungJun Kim (* equal contribution)

CHI '25: Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems. 1st author

This paper introduces TelePulse, a system integrating biomechanical simulation with electrical muscle stimulation (EMS) to provide precise haptic feedback for teleoperation tasks in virtual reality. TelePulse comprises two key components: a physical simulation module that calculates joint torques based on real-time force data, and an EMS module that converts these torques into muscle stimulation. Two experiments were conducted to evaluate the system. In the first experiment, we assessed the accuracy of EMS signals generated through biomechanical simulations by comparing them with electromyography (EMG) data during force-directed tasks. The results demonstrated that TelePulse provided muscle stimulation patterns closely resembling natural muscle activations. In the second experiment, we evaluated the impact of TelePulse on teleoperation performance across continuous and impulse force tasks, showing significant improvements in task accuracy and presence compared to conventional EMS systems. The results suggest that TelePulse's biomechanical EMS enhances teleoperation by providing realistic force feedback in virtual environments.

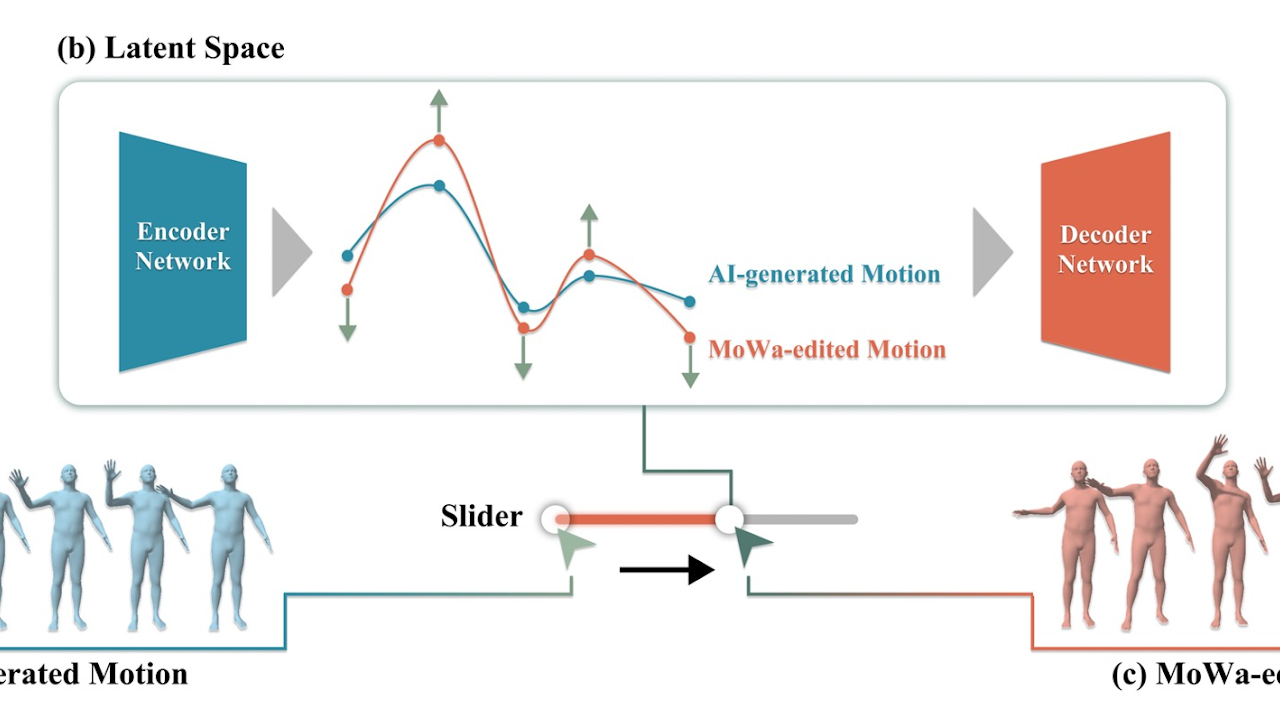

MoWa: An Authoring Tool for Refining AI-Generated Avatar Motion through Latent Waveform Manipulation

CHI '25: Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems. 1st author

Creating expressive and realistic motion animations is a challenging task. Generative artificial intelligence (AI) models have emerged to address this challenge, offering the capability to synthesize motion animations from text prompts. However, the effective integration of AI-generated motion into the workflows of professional designers remains uncertain. This study proposes MoWa, an authoring tool designed to refine AI-generated motions to meet professional standards. A formative study with six professional motion designers identified the strengths and weaknesses of AI-generated motions. To address these weaknesses, MoWa utilizes latent space to enhance the expressiveness of motions, making them suitable for use in professional workflows. A user study involving twelve professional motion designers was conducted to evaluate MoWa's effectiveness in refining AI-generated motions. The results indicated that MoWa streamlines the motion design process and improves the quality of the outcomes. These findings suggest that incorporating latent space into motion design tasks can enhance efficiency.

LumiMood: A Creativity Support Tool for Designing the Mood of a 3D Scene

Jeongseok Oh, Seungju Kim, SeungJun Kim

CHI '24: Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Article No. 174, pp. 1-21, 11 May 2024. 1st author

The aesthetic design of 3D scenes in game content enhances players’ experience by inducing desired emotions. Creating emotionally engaging scenes involves designing low-level features, such as color distribution, contrast, and brightness. This study presents LumiMood, an AI-driven creativity support tool (CST) that automatically adjusts lighting and post-processing to create moods for 3D scenes. LumiMood supports designers by synthesizing reference images, creating mood templates, and providing intermediate design steps. Our formative study with 10 designers identified distinct challenges in mood design that varied with participants’ experience levels. A user study involving 40 designers revealed that LumiMood benefits designers by streamlining their workflow, improving precision, and increasing mood intention accuracy. Results indicate that LumiMood helps clarify mood concepts and improves the interpretation of lighting and post-processing, thereby addressing the identified challenges. We observe the effect of template-based design and discuss factors to consider when building AI-driven CSTs for users with varying levels of experience.

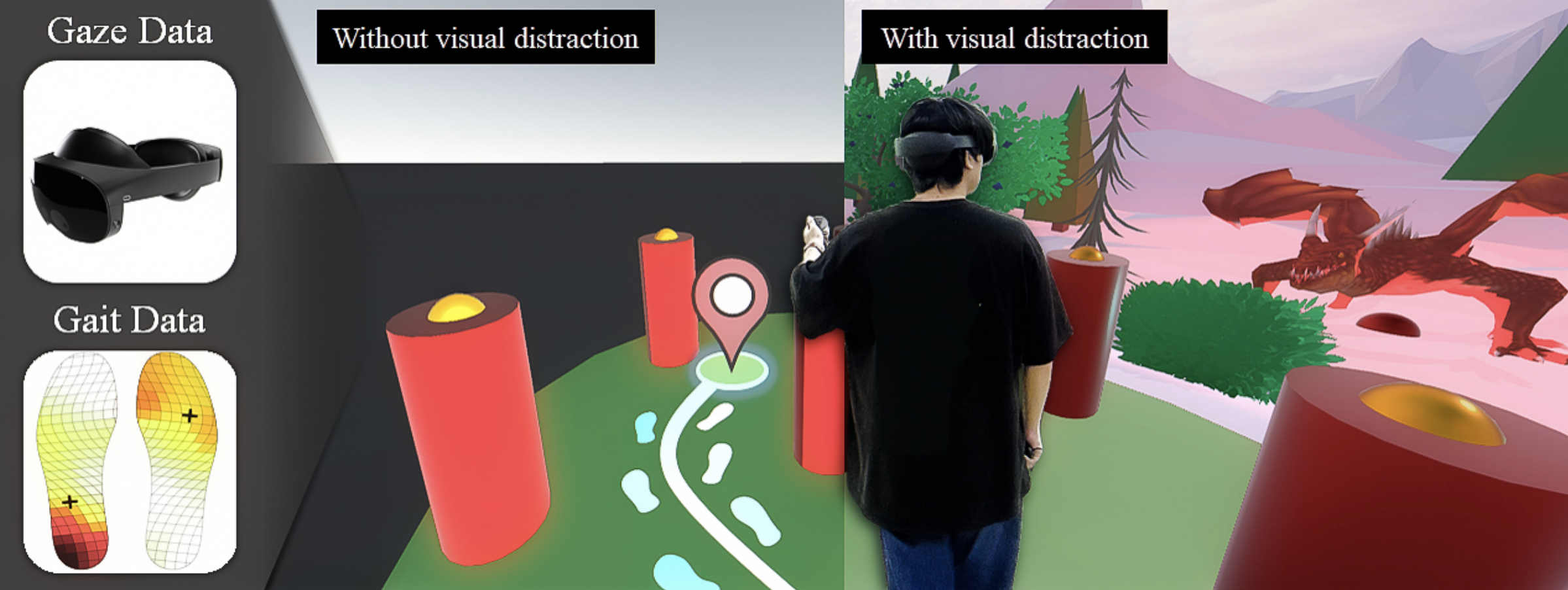

GaitWay: Gait Data-Based VR Locomotion Prediction System Robust to Visual Distraction

Seokhyun Hwang, YongIn Kim, Jeongseok Oh, SeungJun Kim

CHI EA '24: Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Article No. 173, pp. 1-8, 11 May 2024.

In VR environments, users’ sense of presence is enhanced through natural locomotion. Redirected Walking (RDW) technology can provide a wider walking area by manipulating the user’s trajectory. Because predicting the user’s future position enables broader application of RDW, prior research has combined gaze data with past positions to reduce prediction errors. However, in VR content that is replete with creatures and decorations, gaze dispersion may degrade data quality. Thus, we propose GaitWay, an alternative system that utilizes gait data, which correlates directly with user locomotion. In this study, 11 participants navigated a visually distracting, three-tiered VR environment while performing designated tasks. We employed a long short-term memory (LSTM) network in GaitWay to forecast positions two seconds ahead and evaluated its prediction accuracy. The findings demonstrated that incorporating gaze data significantly increased errors in highly distracting settings, whereas GaitWay consistently reduced errors regardless of environmental complexity.

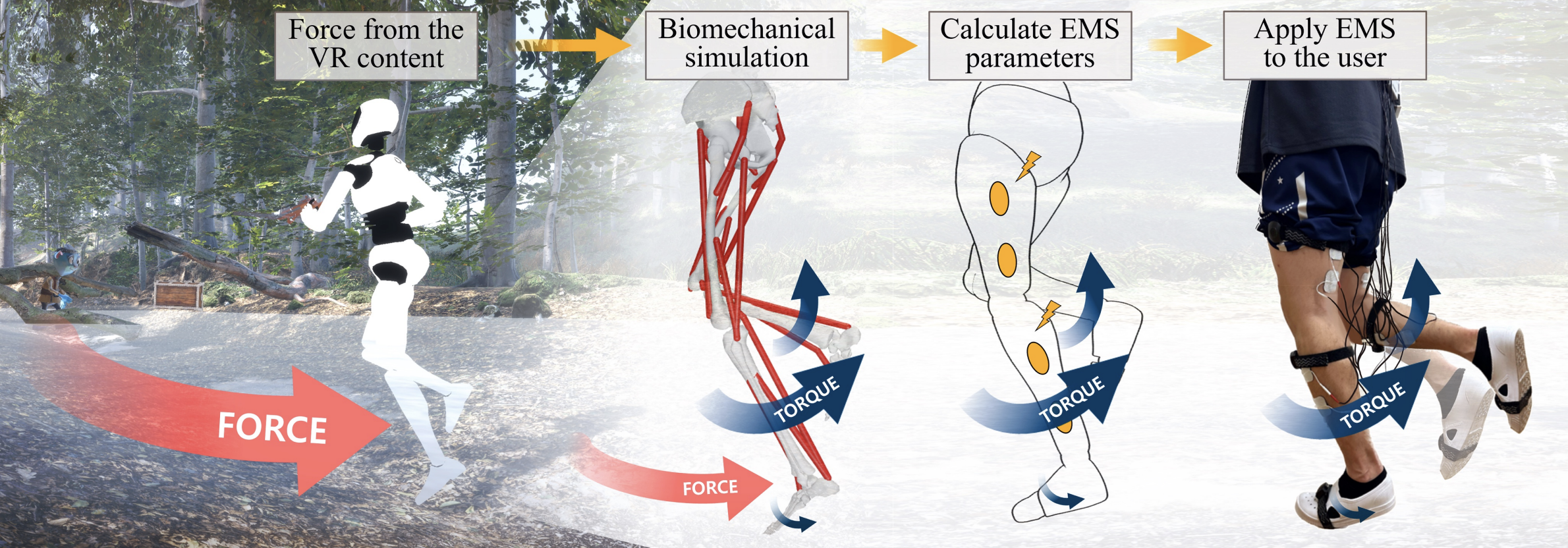

ErgoPulse: Electrifying Your Lower Body With Biomechanical Simulation-based Electrical Muscle Stimulation Haptic System in Virtual Reality

Seokhyun Hwang, Jeongseok Oh, Seongjun Kang, Minwoo Seong, Ahmed Elsharkawy, SeungJun Kim

CHI '24: Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Article No. 416, pp. 1-21, 11 May 2024. (Honorable Mention 🏆)

This study presents ErgoPulse, a system that integrates biomechanical simulation with electrical muscle stimulation (EMS) to provide kinesthetic force feedback to the lower body in virtual reality (VR). ErgoPulse features two main parts: a biomechanical simulation part that calculates lower-body joint torques to replicate forces from VR environments, and an EMS part that translates these torques into muscle stimulation. In the first experiment, we assessed users’ ability to discern haptic force intensity and direction, and observed that perceived resolution varied with force direction. The second experiment evaluated ErgoPulse’s ability to increase haptic force accuracy and user presence in both continuous and impulse force VR game environments. The experimental results showed that ErgoPulse’s biomechanical simulation increased the accuracy of force delivery compared to traditional EMS, enhancing overall user presence. Furthermore, interview participants suggested improving the haptic experience by integrating additional stimuli such as temperature, skin stretch, and impact.

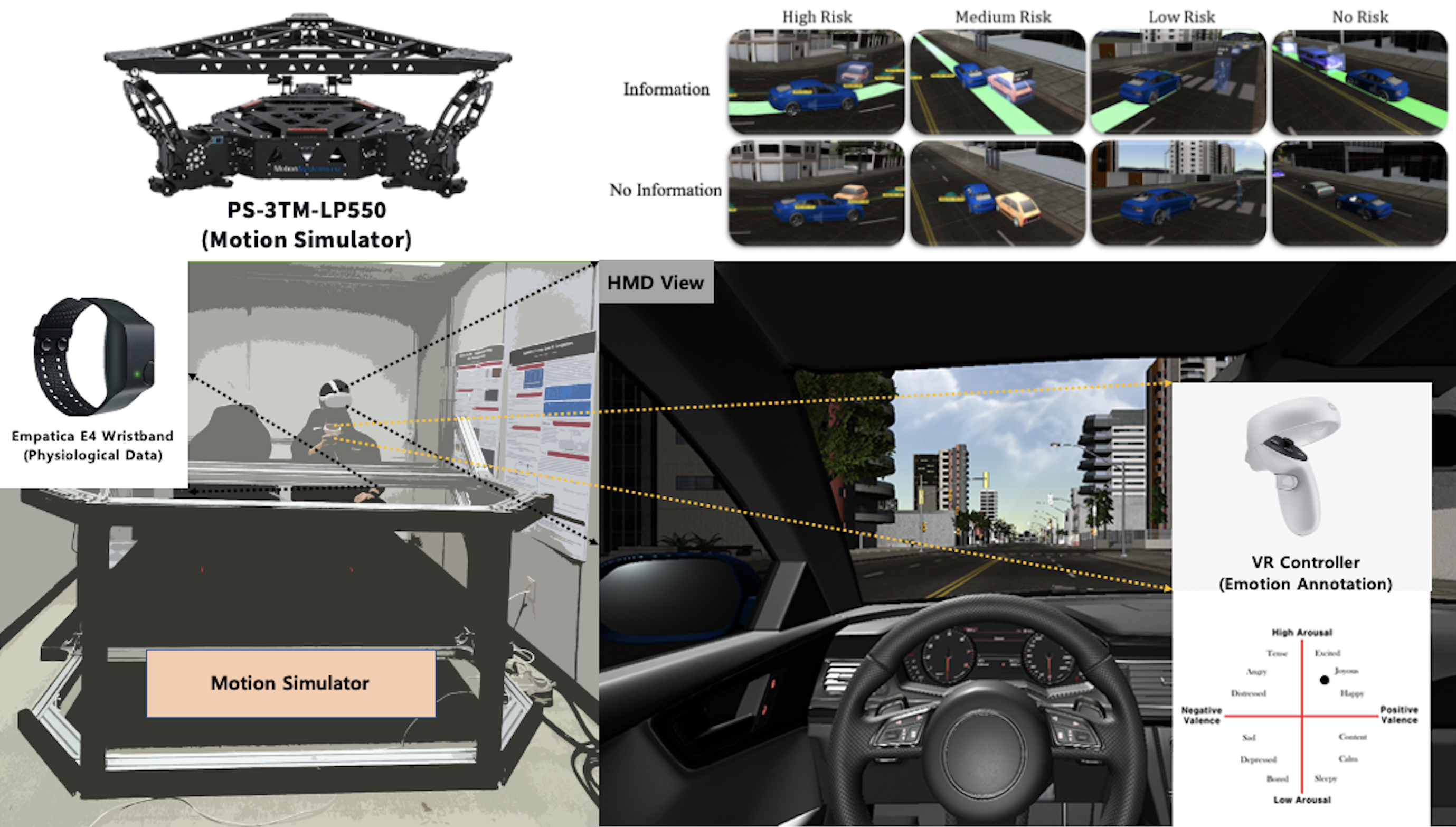

Assessing the Impact of AR HUDs and Risk Level on User Experience in Self-Driving Cars: Results from a Realistic Driving Simulation

Seungju Kim *, Jeongseok Oh *, Minwoo Seong *, Eunki Jeon, Yeon-Kug Moon, SeungJun Kim (* equal contribution)

Appl. Sci. 2023, Vol. 13, No. 8, Article 4952, 3 Mar. 2023. 1st author

The adoption of self-driving technologies requires addressing public concerns about their reliability and trustworthiness. To understand how the level of risk and head-up display (HUD) information influence user experience (UX) in self-driving vehicles, we used virtual reality (VR) and a motion simulator to simulate risky situations, including accidents, with HUD information provided under different conditions. The findings revealed how HUD information related to the immediate environment and the severity of the accident influenced UX. Further, we investigated galvanic skin response (GSR) and self-reported emotion (valence and arousal) annotation data and analyzed the correlations between them. The results indicate significant differences and correlations between GSR data and self-reported annotation data depending on the level of risk and whether information was provided through the HUD. Hence, VR simulations combined with motion platforms can be used to observe the UX (trust, perceived safety, situation awareness, immersion and presence, and reaction to events) of self-driving vehicles while controlling road conditions such as risky situations. Our results indicate that providing HUD information significantly increases users’ trust and situation awareness, thereby improving the user experience in self-driving vehicles.

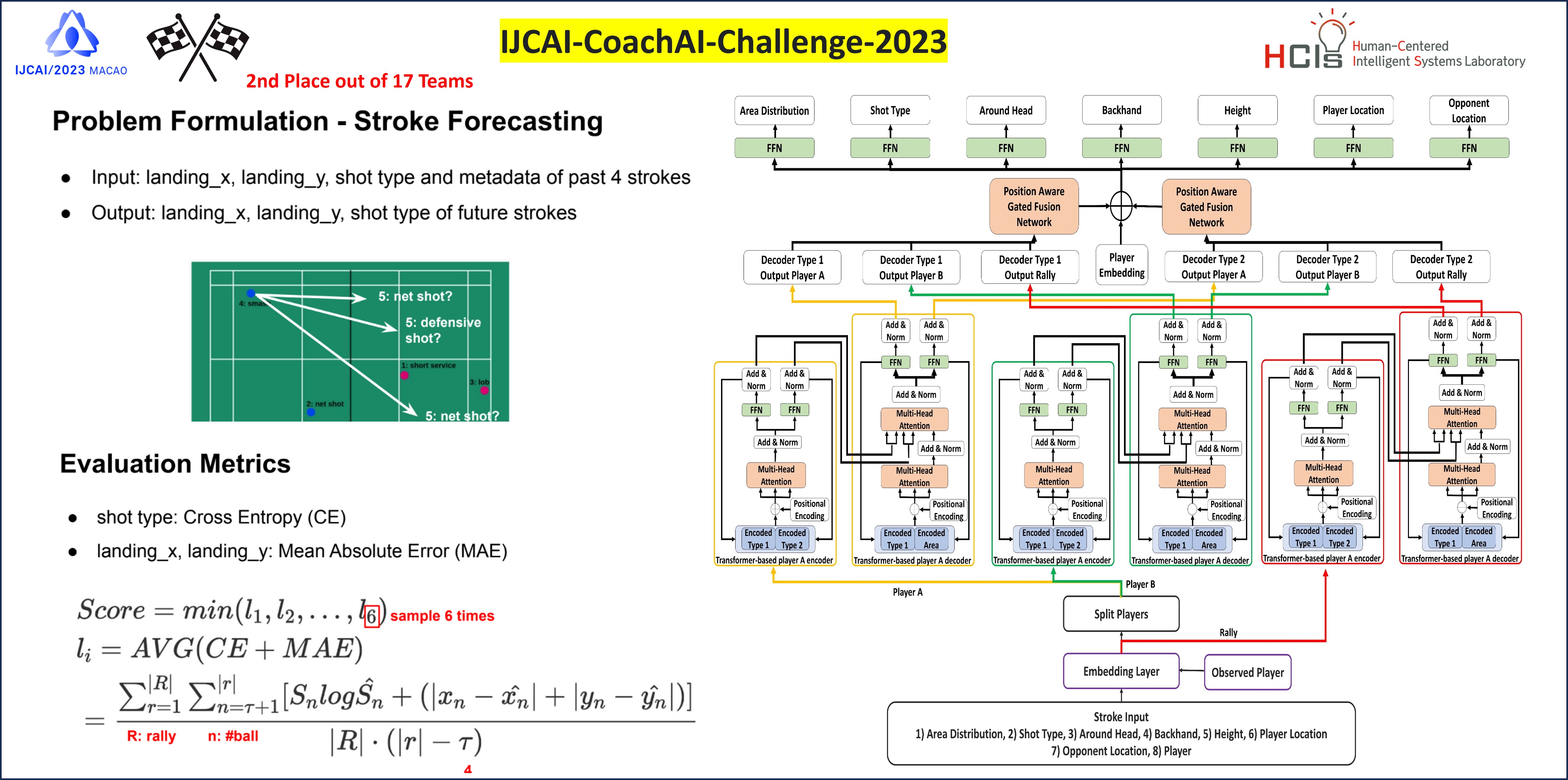

MuLMINet: Multi-Layer Multi-Input Transformer Network with Weighted Loss

Minwoo Seong *, Jeongseok Oh *, SeungJun Kim (* equal contribution)

International Joint Conference on Artificial Intelligence (IJCAI) CoachAI Badminton Challenge Technical Report (2nd Place 🏆). 1st author

The increasing use of artificial intelligence (AI) technology in turn-based sports, such as badminton, has sparked significant interest in evaluating strategies through the analysis of match video data. Predicting future shots based on past ones plays a vital role in coaching and strategic planning. In this study, we present a Multi-Layer Multi-Input Transformer Network (MuLMINet) that leverages professional badminton players’ match data to accurately predict future shot types and area coordinates. Our approach placed second (runner-up) in Track 2 of the IJCAI CoachAI Badminton Challenge 2023. To facilitate further research, we have made our code publicly available online as a contribution to the broader research community’s work on AI-assisted sports analysis.
